Humidity is a key factor in the quality and service life of a building, because too much or too little of it can cause damage. In the construction industry, humidity control units are used across many stages and processes.

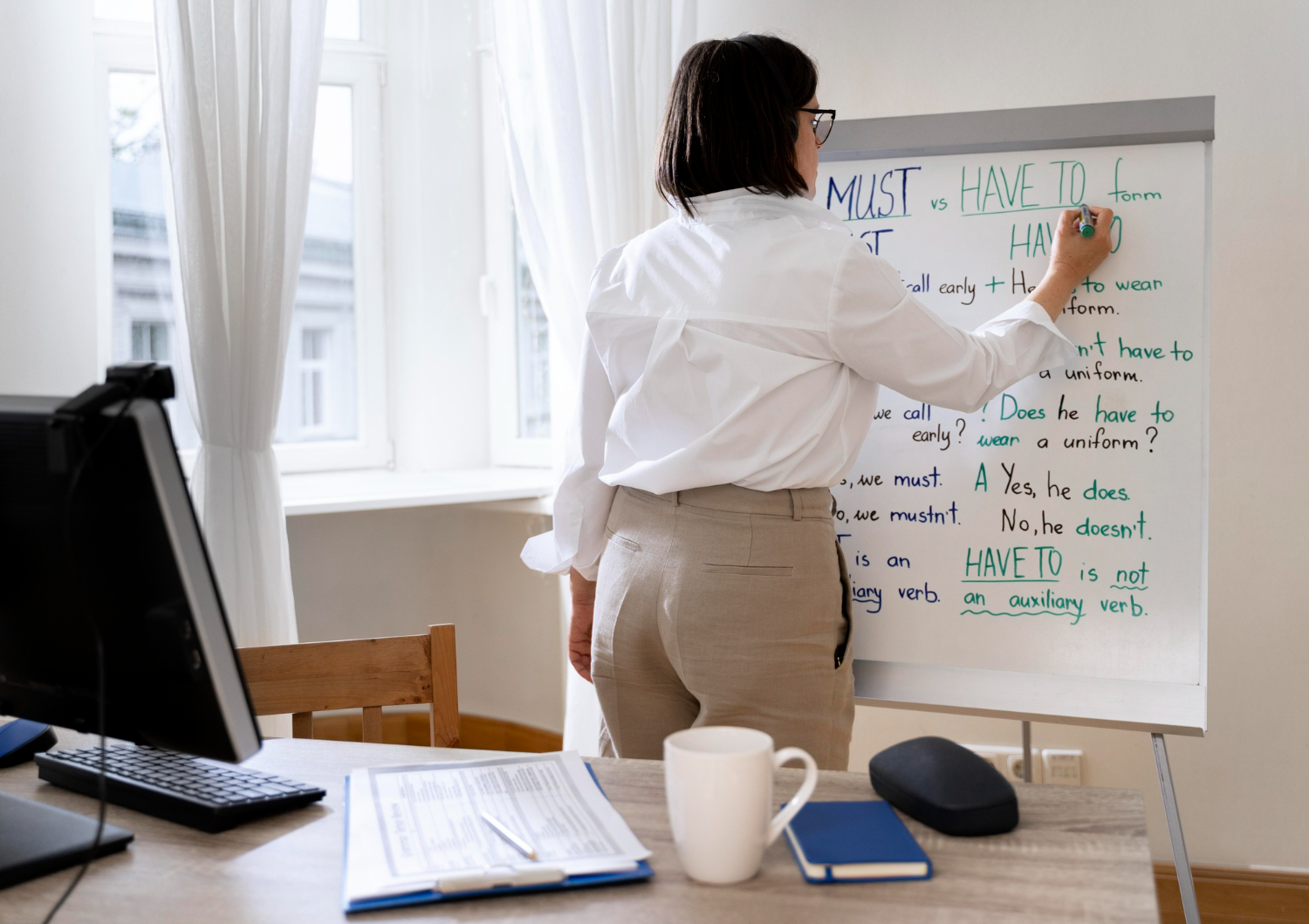

Tips for choosing a one-on-one English tutor

One-on-one English lessons are an effective way to learn the language, because the learner gets the tutor's full attention and can study according to their own needs and goals. Choosing the right one-on-one English tutor is not easy, however. Learners should consider the following factors:

1. The tutor's knowledge and experience. An English tutor should have enough knowledge and experience to teach effectively. Ask about the tutor's educational background and teaching experience to make sure they match your needs.
2. The tutor's teaching style. Tutors teach in different ways, so choose one whose style fits your needs and interests. Some learners prefer a fun, lively style, while others prefer a formal, serious one.
3. The tutor's personality. An English tutor should have a personality that makes a good impression and builds a good relationship with the learner. This matters especially in one-on-one lessons, where tutor and learner interact a great deal. Choose a tutor whose personality suits yours, so that you feel relaxed and enjoy the lessons without pressure, and whose lifestyle is not too different from your own.
4.

Electric actuators: how they work and what their advantages are

An electric actuator is a device that opens and closes a valve, using an electric motor to deliver the driving force. It is widely used in industry because it is convenient and saves time, to the point of being considered indispensable. To build a better understanding, this article looks at how electric actuators work, their types, and their advantages.

How does an electric actuator work?

In general, an electric actuator works in one of two ways:

1. Linear: motion along a straight line.
2. Rotary: circular or rotating motion, with a selectable angle of travel of 90, 180, 270, or up to 360 degrees.

What is a welding cable, what does it do, and how many types are there?

Many people have seen welding cables before, for example while watching a welder at work. A welding cable carries the welding current from the welding machine to the arc. Two cables are used: the ground cable and the welding cable. The welding cable is connected to the electrode holder, and the end of the ground cable is connected to the work clamp.

What is a welding cable made of?

It is normally made of many fine copper strands so that the cable can bend, which makes it convenient to work with. The outside of the cable is covered with rubber or PVC insulation.

How many types of welding cable are there?

Black welding cable is made from good-quality synthetic rubber. It withstands high temperatures of about 65-70 degrees Celsius, does not deteriorate easily, and resists abrasion and being dragged around the work site. It is popular in heavy industry plants with high temperatures, as well as for outdoor work and repair work in shipyards.

Welding cable

What is EPC like? Let's get to know it

Building any power plant requires a reliable service provider. Electrical work demands great caution, so it calls for a high level of expertise. EPC is one such reliable provider, staffed by a professional team, and this article introduces what it looks like.

EPC covers engineering services

There are many power plant construction providers today. EPC can deliver power plant construction and engineering services in a targeted way, built around three phases: Pre-Construction, Execution, and After-Construction. It is responsible for procuring equipment and machinery, installation, and construction from the start of the project to the end, and suits projects from small to large. EPC works systematically, starting with a study of whether the investment is worthwhile and of what environmental impacts may arise.

What are the benefits of automated inventory management?

Today we live in an era in which technology and automation are having a major impact on every sector, especially industry, where they can greatly improve efficiency and business results. Inventory management applies to every sector, but warehouses are where such systems work particularly well. Among warehouses for rent in Khon Kaen in particular, many manage their inventory systematically.

On closer inspection, automated inventory management systems prove highly effective. One process that makes work easier is automatic data collection: it reduces labor and complicated operations, and it cuts errors caused by human work. As a result, we get accurate, regularly updated stock quantities to use for further planning.

By adopting an automated inventory management system, businesses looking for warehouses for rent in Khon Kaen can also raise the quality of their own operations, because it reduces the hassle and problems of organizing goods and uses resources more efficiently. This carries through to the business's net profit and gives customers better value.

An important mechanism in system-based inventory management is automatic restocking, which changes how the old way of working operated. The system adjusts stock quantities in the warehouse automatically when goods are added to inventory, so that goods do not run out and replacements can be arranged at any time without interrupting operations. If we look more closely at the automated replenishment methods used in warehouses for rent in Khon Kaen
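
The automatic restocking idea described above can be sketched as a simple reorder-point check. This is only an illustration under stated assumptions: the article does not say how any real warehouse system decides when to restock, and the names `StockItem`, `reorder_point`, `target_level`, and `replenishment_orders`, along with the sample figures, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StockItem:
    sku: str
    on_hand: int        # units currently in the warehouse
    reorder_point: int  # hypothetical threshold that triggers a refill
    target_level: int   # hypothetical level to refill up to


def replenishment_orders(items):
    """Return {sku: quantity} for every item at or below its reorder point."""
    orders = {}
    for item in items:
        if item.on_hand <= item.reorder_point:
            # Order enough units to bring the item back up to its target level.
            orders[item.sku] = item.target_level - item.on_hand
    return orders


# Made-up sample data: only BOX-S has fallen below its reorder point.
stock = [
    StockItem("BOX-S", on_hand=12, reorder_point=20, target_level=100),
    StockItem("BOX-L", on_hand=75, reorder_point=30, target_level=120),
]
print(replenishment_orders(stock))
```

Running the check on every stock update, rather than during a periodic manual count, is what would let replacement goods be arranged before an item runs out.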

Cheap Type 3 insurance coverage: the best value for the price

If you are interested in buying car insurance but cannot decide which type suits your car, get to know cheap Type 3 insurance with us at Roojai. We offer many coverage options, especially cheap Type 3 insurance, which, as is well known, is the cheapest type of policy. For an older car, or one that is mostly parked and rarely driven, Type 3 alone is enough to meet the need and gives the driver meaningful extra protection.

Car insurance does not have to be the most expensive policy with the most coverage. Choose a policy that fits how you use the car, so that you do not buy overly expensive insurance and run into financial problems later.

Buy Type 3 coverage online from Roojai

If you are looking for cheap Type 3 insurance but cannot decide which website to buy from, you can learn about and buy it from Roojai, giving the insured more protection on the road. On the Roojai website, customers can first review the basic details of the coverage, the exclusions, and the purchase conditions, which makes it easier to decide. If you are still unsure about where to buy insurance, come and learn more about Roojai, so you can be confident that a Type 3 policy bought from Roojai gives the insured the best coverage, suited to how they use their car.

Business is something many people take an interest in

Business matters a great deal to many of us today. Many people want to start one so that they do not have to work for someone else. Anyone who does should learn how to look after their business, and that means seeking out as much knowledge as possible: the more we learn, the better off we are. Anything that helps the business grow deserves attention, and choosing to run a business calls for real interest and commitment.

It also pays to follow business news closely. Looking up information and keeping up with developments leads to good outcomes, and paying attention to how the business is run may bring higher profits. Running a business is not easy, but once we grasp its key points, things go much better. We should therefore treat all of this as important and gather as much knowledge as we can. If the business grows, that is good for us and for our own happiness.

What is AI? A system that matters to your business

Over the past several years, many people have probably followed stories from the business technology world about using AI, or artificial intelligence, to make management more convenient and faster. For anyone who does not yet know what AI is and how it relates to business, this article explains. And if you run a business, this is something that can change the way you do it.

The meaning of AI that you need to know

The Digital Government Development Agency defines AI (Artificial Intelligence) as technology that gives machines and computers capabilities through algorithms and statistical tools, in order to create intelligent software that can imitate human abilities such as distinguishing, decision-making, forecasting, and communicating with humans, and that in some cases may even learn by itself.

IT fields of study that meet the needs of future work

For students interested in studying and working in IT, a profession in high demand worldwide and one that matters to every line of work, this article introduces the IT (Information Technology) fields that meet the needs of future work.

IT fields to carry into a future career

Many fields of study related to IT are available today. Broadly, they are as follows.

A field that directly covers almost every type of IT work. Here you learn coding, computer program development, and the equipment side of networks, software, and hardware. After graduating, you can do almost anything in IT, for example analyst, program designer, or programmer.